Recon and Info Gathering

“If you know the enemy and know yourself, you need not fear the result of a hundred battles. If you know yourself but not the enemy, for every victory gained you will also suffer a defeat. If you know neither the enemy nor yourself, you will succumb in every battle.”

Disclaimer:

The content shared in this blog is intended for educational purposes only. The techniques, tools, and information discussed are meant to promote responsible and ethical cybersecurity practices. Unauthorized access, misuse, or actions taken using the information provided may violate local laws and regulations and are strictly prohibited.

The author assumes no liability or responsibility for any harm, misuse, or damage resulting from the application or interpretation of the material in this blog. Readers are encouraged to seek proper authorization before conducting any security assessments and to adhere to all relevant legal and ethical guidelines.

TL;DR

Enumerating the target, application, and domain is arguably the most important step in any engagement.

Introduction

The famous Sun Tzu quote sums up why reconnaissance and information gathering is arguably the most important part of any engagement, whether that is a web application assessment, a penetration test, or a phishing/vishing test. It even applies outside of these scenarios, such as job hunting, presenting or speaking to audiences, or relationships. If you do not know who or what you are up against, you have no way of knowing the strengths or weaknesses your target might have.

This blog will cover recon and information gathering with respect to web applications and bug bounties, since the PWPA certification is aimed at associate-level web application penetration testing.

The main difference between a bug bounty and a penetration test is that in a bug bounty, the impact of the finding is what matters most, whereas in a penetration test it is better to report on everything so that the client can better secure their application. Timing is another factor: bug bounties usually have no time frame, whereas a penetration test is time-boxed with a defined start and end date.

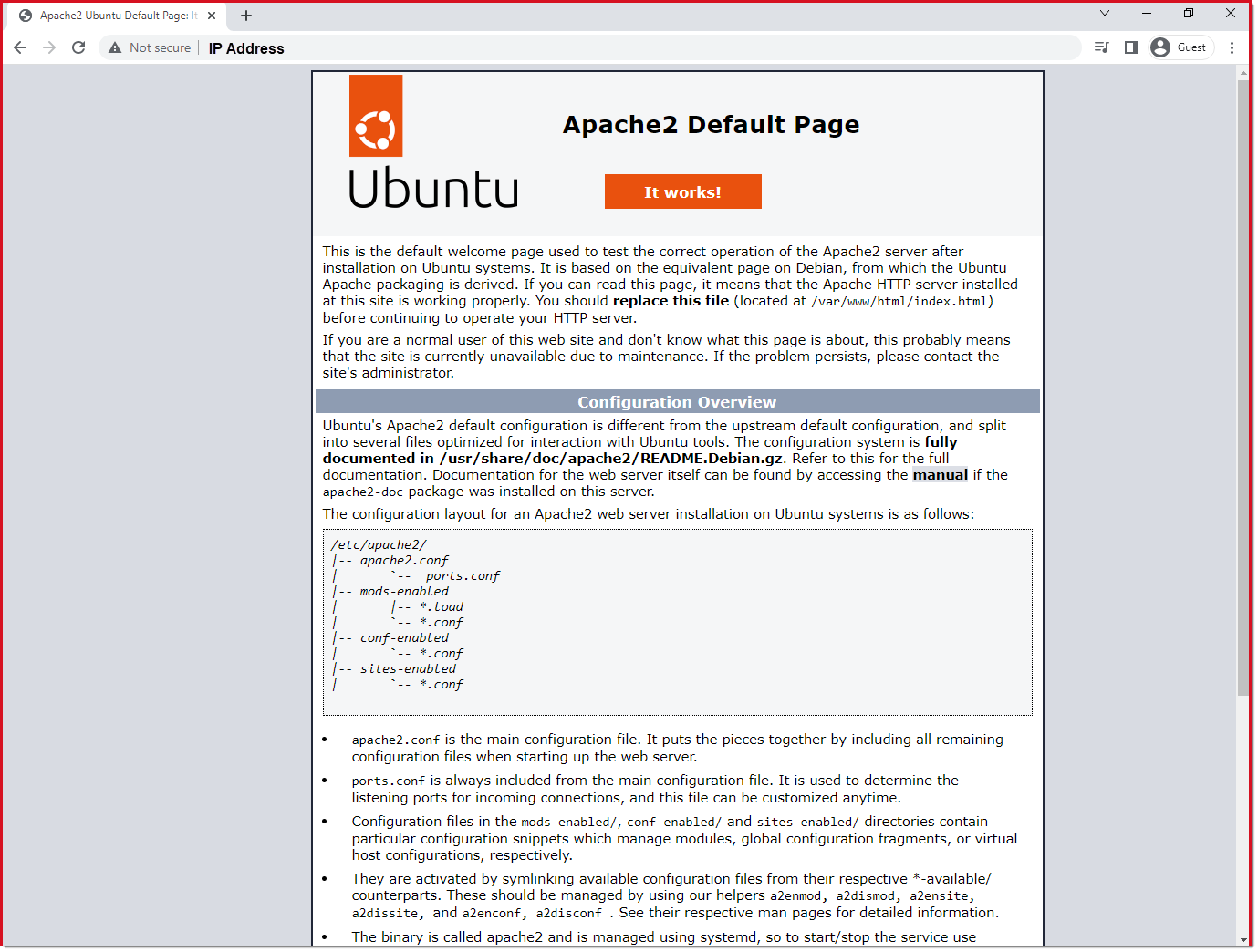

An easy example would be if you found a default webpage like the below:

This is usually not considered in scope or a valid finding in a bug bounty program, but in a penetration test it is. A default web page accessible from the internet gives a threat actor information about the web server, but has no real “impact” on the application or its underlying data. In a pentest it is worth calling out, because disabling default web pages is good cyber hygiene and prevents unnecessary information disclosure that makes an attacker’s life that much easier.

Methodology

It is more valuable to understand the methodology behind the different stages of attacking a web application than to memorize any single tool. Over time, tools get updated or archived, and newer, better tools are created to carry out the same methodology.

My methodology (heavily learned from TCM Security’s Practical Bug Bounty course for the PWPA: https://academy.tcm-sec.com/p/practical-bug-bounty) is as follows, with the third and fourth steps usually done in parallel:

Verify the scope of the engagement.

Perform fingerprinting of the web application.

Perform subdomain enumeration.

Perform directory enumeration.

Verify scope

Bug Bounty

Bug bounty platforms like HackerOne, Intigriti, and Bugcrowd have a scope section for each program. The companies listed on those platforms set these scopes, and some update them over time.

Usually there is no need to follow up with the program admins unless you find a subdomain or asset that is listed as neither in scope nor out of scope.

Penetration Test

Penetration tests are scoped based on the needs of the client/customer. Usually there is an initial call with the client/customer to understand their goals for the assessment. Once the goals have been established, there are generally discussions around the scope and any additional requests that might be needed. Because penetration tests are time-boxed, it is a good idea to ask relevant questions that can reduce the time required for enumeration.

Fingerprinting

What and why?

This is the act of identifying which technologies the web app uses. Knowing the technologies and their versions lets us choose appropriate payloads in the exploitation phase of the engagement. For example, if you identify that an application uses a Postgres database, it makes sense to only attempt SQL injection payloads that work against Postgres rather than payloads that only work against an Oracle database.

Tools

There are plenty of tools and websites that can assist with this stage of recon. Below are some that the course covers and that I feel work well enough.

Wappalyzer is a browser extension that can be installed in Chrome, Firefox, Edge, and Safari. When you visit a page, you will be able to see the technologies that the website is utilizing and any identified versions.

Another website you can use is BuiltWith. Here is a link to its output for this blog: https://builtwith.com/innersphere.space

If you want to analyze the headers a web page is serving, you can use manual curl commands or Burp Suite, or you can use a website like Security Headers, which shows all the headers a website is sending.

Here is the security headers link for this site: https://securityheaders.com/?q=https%3A%2F%2Finnersphere.space&followRedirects=on
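For the manual curl route, the sketch below flags which common security headers are missing from a response. It is a minimal example, not a replacement for Security Headers: `missing_security_headers` is a hypothetical helper name, and the list of headers it checks is an assumption chosen for illustration.

```shell
# Hypothetical helper: read raw HTTP response headers on stdin and print
# each header from the list below that does not appear in the response.
# The header list is an illustrative subset (an assumption), not a standard.
missing_security_headers() {
  # Header names are case-insensitive, so lowercase everything first
  headers=$(tr '[:upper:]' '[:lower:]')
  for h in strict-transport-security content-security-policy \
           x-frame-options x-content-type-options \
           referrer-policy permissions-policy; do
    # A header is present if some line starts with "name:"
    if ! printf '%s\n' "$headers" | grep -q "^$h:"; then
      printf '%s\n' "$h"
    fi
  done
}

# Example usage against a live site (requires network access):
#   curl -sI https://innersphere.space | missing_security_headers
```

Note that `curl -sI` sends a HEAD request and does not follow redirects, so you may want to point it at the final URL.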

Subdomain Enumeration

What and why?

This is the act of identifying any additional subdomains that are relevant to the scope. Most of the time, this applies to bug bounty programs that have wildcard scopes. A wildcard domain would be something like *.innersphere.space. This indicates that hosts like www.innersphere.space and dev.innersphere.space are in scope, but something like www.partner-domain.com is out of scope.
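A wildcard scope can be checked mechanically with a shell `case` pattern. The sketch below is a hypothetical helper, assuming a scope of `*.innersphere.space` plus the apex domain listed as its own entry (many programs list the apex separately, so check the program page). Note that the pattern requires a literal dot, so a look-alike such as `evil-innersphere.space` does not match.

```shell
# Hypothetical helper: print only the hostnames from stdin that match the
# example scope. The scope patterns below are assumptions for illustration.
filter_in_scope() {
  while IFS= read -r host; do
    # Normalise: lowercase and drop a trailing dot
    host=$(printf '%s' "$host" | tr '[:upper:]' '[:lower:]')
    host=${host%.}
    case $host in
      innersphere.space|*.innersphere.space)
        printf '%s\n' "$host" ;;
      *)
        ;;  # out of scope, e.g. www.partner-domain.com
    esac
  done
}

# Example usage:
#   filter_in_scope < all_subdomains.txt > in_scope.txt
```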

For penetration testing engagements, the client/customer usually provides the subdomains, so little subdomain enumeration is required, but sometimes they will give a wildcard.

For bug bounties, identifying subdomains, such as an in-scope development environment, can help you find more vulnerabilities. Development and other non-production subdomains might not have security measures as stringent as production, or they might be testing newer technologies and/or business logic that could be flawed before it goes to production.

Tools

There are a plethora of ways that you can perform subdomain enumeration. Some are CLI-based, and others are web-based.

The course covers techniques and tools like Google dorking, crt.sh, subfinder, assetfinder, and amass that will help you with the enumeration.

Google Dorking

Google dorking is a fun name for filtering the search results returned by Google (or other search engines) with advanced search operators. There are plenty of write-ups, blogs, and cheat sheets about dorking, like this wikiHow article: https://www.wikihow.com/Google-Dorking-Commands

An easy one for identifying subdomains is simply site:innersphere.space -www, which tells Google to only return results from the innersphere.space domain and to drop results containing the term www, filtering out the www subdomain. A stricter variant is site:innersphere.space -site:www.innersphere.space.

Crt.sh

This website searches Certificate Transparency logs for the TLS/SSL certificates issued for a domain. It shows all the details of each certificate but, more importantly, which subdomains the certificates were issued for. Here is the search for this blog: https://crt.sh/?q=innersphere.space
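crt.sh can also return its results as JSON when `output=json` is added to the query, which is handy for scripting. The sketch below pulls out the `name_value` field (where crt.sh lists the names on each certificate, sometimes several per entry separated by an escaped `\n`), strips wildcard prefixes, and de-duplicates. `crtsh_names` is a hypothetical helper, and the grep-based extraction is a rough assumption for illustration; a real JSON parser such as jq would be more robust.

```shell
# Hypothetical helper: read crt.sh JSON on stdin and print unique hostnames.
# Steps: grab each "name_value" string, strip the key and quotes, split the
# escaped \n separators into real lines, drop "*." prefixes, lowercase, dedupe.
crtsh_names() {
  grep -o '"name_value": *"[^"]*"' |
    sed 's/^"name_value": *"//; s/"$//' |
    awk '{ gsub(/\\n/, "\n"); print }' |
    sed 's/^\*\.//' |
    tr '[:upper:]' '[:lower:]' |
    sort -u
}

# Example usage (requires network access):
#   curl -s 'https://crt.sh/?q=innersphere.space&output=json' | crtsh_names
```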

Subfinder

This is a CLI tool that is already installed in Kali Linux. If it is not installed, you can install it with:

sudo apt install subfinder

You can then call the tool like this:

subfinder -d innersphere.space -o outfile.txt

The -d flag specifies the domain you are searching, and the -o flag specifies an output file so that you can save your results for later use.

Assetfinder

You can find this tool and its installation/usage instructions here: https://github.com/tomnomnom/assetfinder

This tool is written in Go and has only one flag, --subs-only, which tells it to only return subdomains of the target domain (for example, assetfinder --subs-only innersphere.space).

Amass

This tool is powerful but takes some time to run. It was created by OWASP as an OSINT gathering tool. There are many different options and tools within the Amass project, but for the purposes of this course, we will only talk about the enum subcommand.

It comes pre-installed in Kali Linux, but if you do not have it installed, you can install it on Kali or another Debian-based distribution using:

sudo apt install amass

Once you have it installed, you can simply call:

amass enum -d innersphere.space

It will then start checking a lot of different sources for subdomains. If you want to learn more about the sources it checks or the various options you can run, you can read up on its GitHub page here: https://github.com/owasp-amass/amass/blob/master/doc/user_guide.md

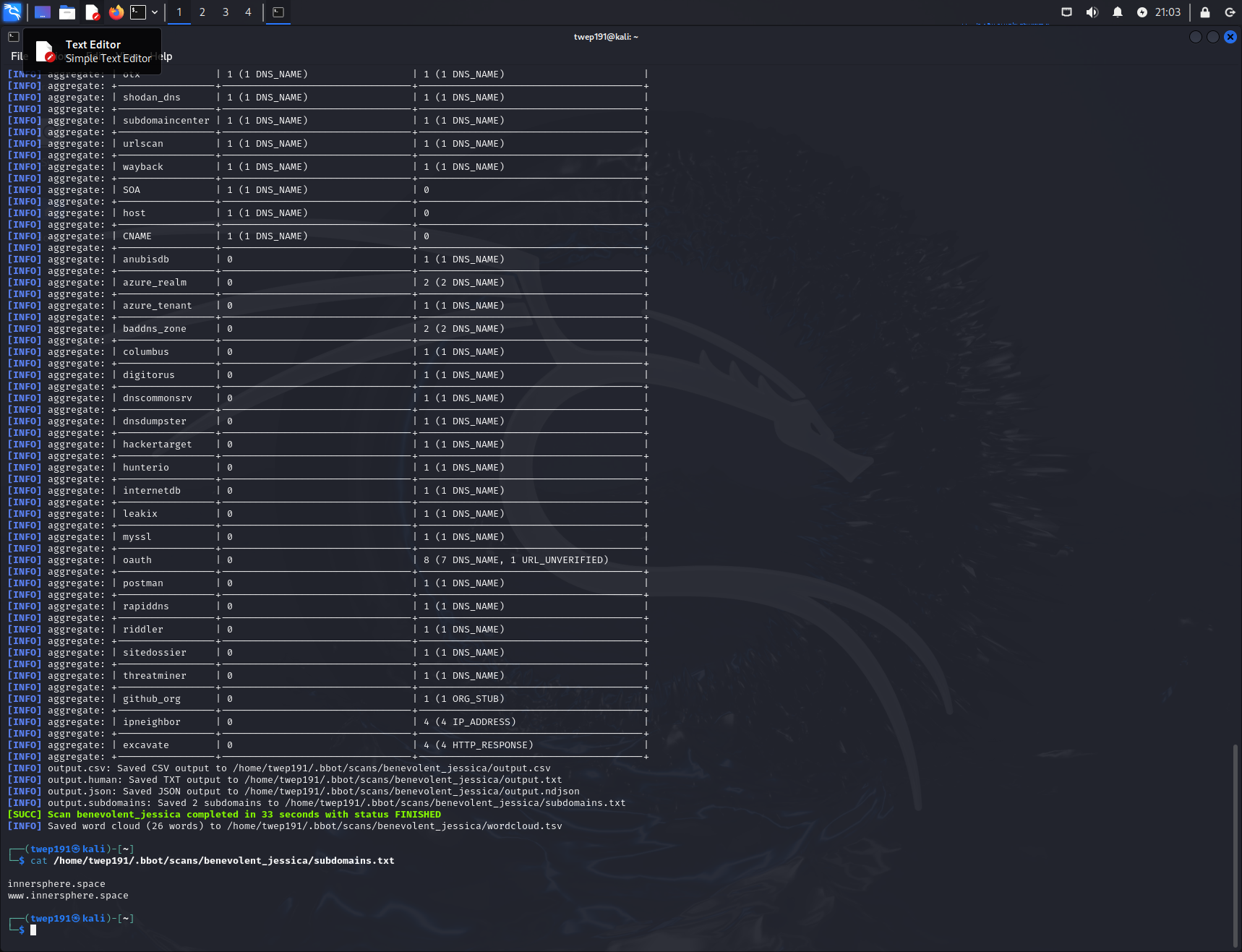

BBOT

This is another really powerful tool, still actively maintained, for performing OSINT on your target domains. It could be a course/blog in and of itself. Like Amass, it works a lot better if you have API keys for the services it can query. You can find the full documentation here: https://www.blacklanternsecurity.com/bbot/Stable/

To install it, you can run the command:

pipx install bbot

After that, the most basic command to perform subdomain enumeration would be:

bbot -t innersphere.space -f subdomain-enum

The -f subdomain-enum flag tells the tool to use all modules flagged as subdomain-enum. Before scanning, it prints out all the modules it will use along with information on them.

After you hit enter, it will begin scanning and output results to your terminal as well as into various files.
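Since each tool queries different sources, it can help to merge their output files into one de-duplicated list before probing. A minimal sketch, assuming each file holds one hostname per line (if a tool prints extra columns, trim them first); `merge_subdomains` and the file names are hypothetical placeholders.

```shell
# Hypothetical helper: merge several one-hostname-per-line files into a single
# normalised, de-duplicated list printed to stdout.
merge_subdomains() {
  # Lowercase; strip whitespace (including Windows CR), a trailing dot,
  # and a leading "*."; drop blank lines; sort and dedupe
  cat "$@" |
    tr '[:upper:]' '[:lower:]' |
    sed -e 's/[[:space:]]//g' -e 's/\.$//' -e 's/^\*\.//' |
    grep -v '^$' |
    sort -u
}

# Example usage (file names are placeholders for your own output files):
#   merge_subdomains subfinder.txt assetfinder.txt amass.txt > all_subdomains.txt
```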

Finding alive domains

After running the tools and looking at their output files, you will want to identify which domains and subdomains are alive and which are dead. You can use a tool called httprobe for this.

You can install it through apt in Kali as follows:

sudo apt install httprobe

Once you have it installed, you can run it against any of your subdomain .txt files to identify whether they are alive. Here is an example command to run it:

cat /home/twep191/.bbot/scans/benevolent_jessica/subdomains.txt | httprobe -prefer-https | grep '^https://' > alive_domains.txt

This command sends the list of subdomains to httprobe. The -prefer-https flag tells the tool to try HTTPS first and only fall back to plain HTTP if HTTPS fails. The output is then sent to grep to keep only URLs that start with https://, and the result is written to an output file listing the live domains.

Screengrab the live domains

You might want to gather screenshots of the live domains and subdomains. Luckily, there is a tool called gowitness that can do that for us.

Again, this tool can be installed in Kali using apt:

sudo apt install gowitness

Before you run it, ensure that the entries in your live subdomain file do not start with https://, so that the tool works properly. You can run this simple sed command to remove the prefix:

sed -e 's|^https://||' alive_domains.txt > alive_for_gowitness.txt

Once that is completed, you can run the following command to grab screenshots of all the live subdomains:

gowitness file -f alive_for_gowitness.txt -P ./innerspherespacescreens --no-http

To break this command down:

-f tells the tool which file to use for the domains

-P tells the tool where to store the screenshots

--no-http tells the tool not to use HTTP and to only use HTTPS to access the domains

Directory Enumeration

What and Why?

This is the act of identifying which endpoints (directories and files) exist within the web application. Sometimes hidden or vulnerable functionality sits in unpublished or unreferenced locations within the application. Enumerating directories and files helps uncover some of this functionality.

This is important in both bug bounties and pentest engagements, but in a pentest engagement this information is occasionally provided ahead of time, reducing the time spent on enumeration and leaving more time for testing the security of the application.

Tools

FFUF

Fuzz Faster U Fool is a very powerful and quick fuzzing tool written in Go to perform directory and/or file enumeration.

Note: by default, this tool only enumerates a single level, so if it finds a directory, you will have to create a new fuzz command to dig into the newly identified directory.

It can be installed in Kali through apt with the command:

sudo apt install ffuf

Once it is installed, you can run a simple directory scan with the following command:

ffuf -w /path/to/wordlist:FUZZ -u https://URL/FUZZ -fc 404

To break down the command:

-w indicates which wordlist you want to utilize to look for directories or files.

-u indicates which URL you want to target

:FUZZ at the end of the wordlist path sets the keyword name, so if you want to fuzz two different things (like a username and a password), you can give each wordlist a different keyword and place those keywords in the request/URL, just as FUZZ appears at the end of the URL above.

-fc is a flag that will allow you to filter out unwanted responses, like 404 Not Found responses from showing up.
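Because of the single-level default noted above, one way to dig deeper is to turn each directory found in the first pass into a new ffuf command. The helper below is a hypothetical sketch that only prints the commands so you can review them before running anything; the base URL, wordlist path, and rate of 10 requests per second are placeholder assumptions. ffuf also has -recursion and -recursion-depth flags that automate this, and a -rate flag that caps requests per second, which is kinder to the target.

```shell
# Hypothetical helper: read directory names (one per line) from a first ffuf
# pass and print a follow-up ffuf command for each. It only prints commands;
# nothing is sent to the target.
next_level_cmds() {
  base_url=${1%/}     # e.g. https://URL (trailing slash removed)
  wordlist=$2         # e.g. /path/to/wordlist
  while IFS= read -r dir; do
    dir=${dir#/}      # strip a leading slash
    dir=${dir%/}      # strip a trailing slash
    [ -n "$dir" ] || continue
    printf 'ffuf -w %s:FUZZ -u %s/%s/FUZZ -fc 404 -rate 10\n' \
      "$wordlist" "$base_url" "$dir"
  done
}

# Example usage:
#   printf 'admin\napi\n' | next_level_cmds https://URL /path/to/wordlist
```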

Running a tool like this is often forbidden in bug bounties, since it can generate a lot of traffic and potentially DoS the website. A good WAF or application will also rate-limit you and prevent your tool from getting good results.

For instance, when running it against this blog, it finds a few directories, but then starts “identifying” directories that don’t exist, with a 403 Forbidden status code. If you then try navigating manually, you get a 429 Too Many Requests response.
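One quick way to spot that kind of false positive is to count how many results came back with each status code: if almost every “hit” is a 403, a WAF or rate limit is the likelier explanation. The sketch assumes a hypothetical plain-text results file with the status code in the first column and the URL in the second; `status_summary` is a hypothetical helper, so adapt the column to whatever format you export.

```shell
# Hypothetical helper: count results per HTTP status code.
# Input format (an assumption): "<status> <url>" on each line.
status_summary() {
  # Tally column 1, then print "status count" pairs in numeric order
  awk 'NF >= 2 { count[$1]++ } END { for (s in count) print s, count[s] }' |
    sort -n
}

# Example usage:
#   status_summary < results.txt
```

If the 403s turn out to be noise, adding them to the filter (for example -fc 403,404) cleans up the output, at the risk of hiding genuinely forbidden directories.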

Dirb

This tool comes pre-installed in Kali. The command to run it is very simple, but the same caveat applies as with FFUF: it sends a lot of requests and may get blocked by a WAF or security tool.

dirb https://URL

This tells the tool to run using its default wordlist. If you want to specify a different wordlist, you can run the following command:

dirb https://URL /path/to/wordlist

Burp Suite Intruder

Burp Suite’s Intruder tool can also be used in the same manner as ffuf or dirb.

Conclusion

If you made it this far, congratulations! You were able to scroll through my word spaghetti around recon and information gathering. Hopefully the information was helpful and not too confusing.

As always, comments are welcome; please keep them civil and on-topic.